I also added some more output from the tool, this time with the simpler version.

DEADLOCK IN JAVA CODE

I just updated the GitHub example application to simplify the code and remove MessageDigest.

Thanks for the work you all have done so far to look into this issue! I've got some comments/questions. We've reproduced this without MessageDigest.getInstance().

A couple of other observations with the new test case shared by the submitter:

- Log_threadstack1 - thread stack dump (collected during the hang)
- Log_jstack_output - jstack output (collected during the hang with jhsdb jstack --mixed --pid)
- Log_threadstack2 - thread stack dump (collected 10 minutes after the hang)

Log_threadstack1 (collected during the hang) shows 999 threads waiting for "pool-1-thread-405", while "pool-1-thread-405" itself is runnable. Log_threadstack2 (collected 10 minutes after the hang) still shows 999 threads waiting for "pool-1-thread-405", and "pool-1-thread-405" is now waiting:

    5687: "pool-1-thread-405" prio=5 Id=416 WAITING on

I didn't collect the jstack output 10 minutes into the hang, as the procedure itself requires a lot of time. David, let me know if I can be of any help on this.

Also, glibc malloc does not seem to have the problem.

I changed a bunch of assertions to guarantees and got this:

    # A fatal error has been detected by the Java Runtime Environment:
    # guarantee(false) failed: Non-balanced monitor enter/exit! Likely JNI locking
    # JRE version: Java(TM) SE Runtime Environment (14.0) (build 14-internal+0-0614107.daholme.)
    # Java VM: Java HotSpot(TM) 64-Bit Server VM (14-internal+0-0614107.daholme., mixed mode, sharing, tiered, compressed oops, g1 gc, linux-amd64)
    # V ObjectMonitor::exit(bool, Thread*)+0x371
    Native frames: (J=compiled Java code, A=aot compiled Java code, j=interpreted, Vv=VM code, C=native code)
    V ObjectMonitor::exit(bool, Thread*)+0x371
    V SharedRuntime::complete_monitor_unlocking_C(oopDesc*, BasicLock*, JavaThread*)+0x3a

A breakthrough. Here we see one thread doing a monitor_exit and finding it had the monitor stack-locked; but the monitor has since been inflated, so it transitions to being locked by the thread reference. Then we see a second thread operating on the same (object, monitor) pair doing a similar transition on monitor_enter. But that should be impossible, as only one thread can ever stack-lock an Object! The second thread is:

    0x00007f75f85ef800 JavaThread "pool-1-thread-131"

But note that the "address" that indicated the Object was stack-locked by this thread is 0x00007f75d4092000, the very end of its stack! That address is also the address of a JavaThread:

    0x00007f75d4092000 JavaThread "pool-1-thread-135"

So suppose pool-1-thread-135 actually owns the monitor when pool-1-thread-131 tries to lock it. Thread-131 sees the owner field representing thread-135, but thinks that address is part of its own stack and so concludes it has the monitor stack-locked! So now two threads "own" the monitor at the same time, and chaos can ensue.
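The ownership confusion between thread-131 and thread-135 can be made concrete with a small model. This is a hypothetical Java sketch, not HotSpot code: `OwnerCheckModel`, `ThreadStack` and `believesItOwns` are illustrative names, treating "part of its stack" as the range `[stackEnd, stackBase)` is an assumption, and the 1 MiB stack size and thread-135's stack range are invented. Only the two addresses come from the analysis.

```java
/**
 * Hypothetical model, NOT HotSpot source: a thread deciding whether it owns an
 * inflated monitor whose owner field may hold either a JavaThread address or,
 * if the lock was stack-locked before inflation, an address on the owner's stack.
 */
public final class OwnerCheckModel {

    /** Illustrative stack range, assumed to be [stackEnd, stackBase) with the stack growing down. */
    record ThreadStack(String name, long stackEnd, long stackBase) {
        boolean containsAddress(long addr) {
            return addr >= stackEnd && addr < stackBase;
        }
    }

    /** True if `self` would conclude that it currently owns the monitor. */
    static boolean believesItOwns(ThreadStack self, long selfThreadAddr, long ownerField) {
        if (ownerField == selfThreadAddr) {
            return true; // owner field names this thread's JavaThread
        }
        // Otherwise an address inside our own stack is read as our BasicLock (stack-locked).
        return self.containsAddress(ownerField);
    }

    public static void main(String[] args) {
        long thread131 = 0x00007f75f85ef800L; // JavaThread "pool-1-thread-131" (from the report)
        long thread135 = 0x00007f75d4092000L; // JavaThread "pool-1-thread-135" (from the report)
        long assumedStackSize = 1L << 20;     // 1 MiB, invented for illustration

        // Per the analysis: thread-131's stack ends exactly at thread-135's JavaThread address.
        ThreadStack stack131 = new ThreadStack("pool-1-thread-131", thread135, thread135 + assumedStackSize);
        // Invented range for thread-135's stack; it does not affect the result below.
        ThreadStack stack135 = new ThreadStack("pool-1-thread-135", 0x00007f75c0000000L, 0x00007f75c0100000L);

        long ownerField = thread135; // thread-135 really owns the inflated monitor

        System.out.println(stack135.name() + " believes it owns: "
                + believesItOwns(stack135, thread135, ownerField));
        System.out.println(stack131.name() + " believes it owns: "
                + believesItOwns(stack131, thread131, ownerField));
    }
}
```

Both lines print `true`, which is the "two owners" situation: a check that only asks "is this address in my stack?" cannot tell a BasicLock on the caller's stack apart from a JavaThread that happens to sit at that address.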

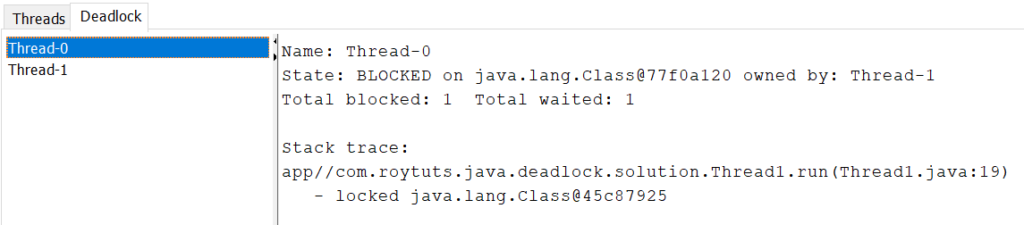

Example log output when a deadlock occurred.

We've run into an issue where our application deadlocks, and when looking at thread dumps we see that threads are waiting for an object monitor that no other thread is currently holding. The thread that the monitor references is parked in a thread pool waiting for its next task.

We've tested on the following operating systems: Debian 8 and 9, and Ubuntu 16.04, and on these OpenJDK JVM runtimes: multiple versions of 1.8 between 1.8.0.121 and 1.8.0.202, 1.9, and 1.11.

STEPS TO FOLLOW TO REPRODUCE THE PROBLEM :

Expected: the application should finish all iterations and not exit the loop early.

Actual: the application exits the loop early because of a timeout caused by the deadlock.

jemalloc 4.5 does not seem to cause the problem. So far we've worked around this by not upgrading to jemalloc 5.1.
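The reported symptom (a loop over thread-pool work that gives up on a timeout) can be approximated with a stress test like the one below. It is a hypothetical sketch, not the submitter's GitHub application: `MonitorStress`, the pool size, task count, iteration count and 10-second timeout are all assumed values. On timeout it uses the standard `ThreadMXBean.dumpAllThreads` API to print each BLOCKED thread with the monitor and owner the JVM reports, which should surface the same kind of information as the thread dumps described above. Whether it actually hangs depends on the environment (the report ties the problem to jemalloc 5.1); on a healthy JVM it should complete every iteration.

```java
import java.lang.management.ManagementFactory;
import java.lang.management.ThreadInfo;
import java.lang.management.ThreadMXBean;
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.TimeoutException;

/** Hypothetical stress test: many pool threads contending on one monitor. */
public final class MonitorStress {
    private static final Object LOCK = new Object(); // the single shared monitor
    private static long counter = 0;                 // guarded by LOCK

    static long count() {
        synchronized (LOCK) {
            return counter;
        }
    }

    /** Submits `tasks` tiny synchronized tasks; returns false if they don't all finish in time. */
    static boolean runIteration(ExecutorService pool, int tasks, long timeoutMs) throws Exception {
        List<Future<?>> futures = new ArrayList<>();
        for (int i = 0; i < tasks; i++) {
            futures.add(pool.submit(() -> {
                synchronized (LOCK) {
                    counter++;
                }
            }));
        }
        long deadline = System.nanoTime() + TimeUnit.MILLISECONDS.toNanos(timeoutMs);
        for (Future<?> f : futures) {
            try {
                f.get(Math.max(0L, deadline - System.nanoTime()), TimeUnit.NANOSECONDS);
            } catch (TimeoutException e) {
                return false; // treated as a hang
            }
        }
        return true;
    }

    /** Prints each BLOCKED thread with the monitor it waits for and the owner the JVM reports. */
    static void dumpBlockedThreads() {
        ThreadMXBean mx = ManagementFactory.getThreadMXBean();
        for (ThreadInfo info : mx.dumpAllThreads(true, false)) {
            if (info.getThreadState() == Thread.State.BLOCKED) {
                System.out.printf("%s blocked on %s, owner: %s%n",
                        info.getThreadName(), info.getLockName(), info.getLockOwnerName());
            }
        }
    }

    public static void main(String[] args) throws Exception {
        int iterations = 100; // assumed
        int poolSize = 1_000; // assumed, roughly the thread count seen in the dumps
        ExecutorService pool = Executors.newFixedThreadPool(poolSize);
        try {
            for (int i = 0; i < iterations; i++) {
                if (!runIteration(pool, poolSize, 10_000)) {
                    System.out.println("Timed out in iteration " + i);
                    dumpBlockedThreads();
                    break; // leaving the loop early is the reported symptom
                }
            }
            System.out.println("completed increments: " + count());
        } finally {
            pool.shutdownNow();
        }
    }
}
```

It can be run directly with `java MonitorStress.java` (single-file source launch, JDK 11+). A timeout alone does not prove the monitor bug; the useful signal is a BLOCKED thread whose reported owner is idle in the pool rather than inside the `synchronized` block.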
